Pitfalls of Graphical User Interface Test Automation and How to Overcome Them

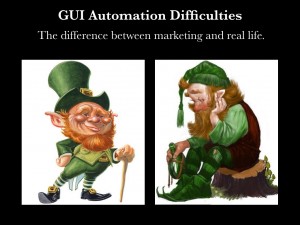

Automating routine work is usually good practice, and in testing, automation is vital: it cuts costs, speeds up testing tasks, and improves the efficiency of your staff. Automating various testing tasks seems like a “no-brainer”, especially when you are reading marketing materials or listening to a sales presentation for some “leading-edge, premier” robot software. Such materials are usually based on success stories from the world of the most complicated user interfaces: CAD software, graphics software, or perhaps some extremely complex but well-designed websites. And these stories are usually true. Still, you should think carefully before starting a GUI test automation project. In many areas of software development, GUI automation is neither easy nor obvious, and your entire automation project can quickly turn into a disaster, despite the visual appeal of the tools.

Beware

First, let’s understand where GUI automation can fail:

- Your project is a distributed system, which consists not only of a GUI side but also of a server side and, maybe, some middle tier.
- Your GUI side is built on highly customized (skinned, smart, etc.) controls.
- You not only want to act upon your software, but also to verify the result of your action.
- You can’t “save” the result of your session in the GUI.[1]

If any of these points are applicable to your GUI automation project, continue reading.

Custom Controls

In a world where look and feel are key to successful software marketing, customizing the standard set of GUI controls is a must. Nothing should stop you from making your controls more attractive to the user, and skinning is one of the easiest ways to give them a fresh look. Some features that make controls smarter (such as autofill, or tree-view structures in initially flat controls) may bring clarity and usability to the user, but may also become a nightmare for your automation team. Modern robots are usually technology-independent, or at least compatible with the GUI frameworks of all common programming languages (they work equally well with a Microsoft .NET interface or a Java Swing/SWT GUI), but usually they can only easily recognize standard controls. Even if you just added some background skinning to the standard ListView control (that is, a control inherited from a standard class), you still run the risk that the robot will no longer recognize it and you will have to map it manually. In that case, you’ll be forced to explain everything about this control to the robot. This matters most when you need to find an element inside the control to click on, invoke its context menu, and so on. While this is not a show-stopper, it can seriously increase the automation effort.

Another problem, specific to Swing interfaces, is the dynamic naming of controls. Very often, programmers do not bother to name each and every control in their interface, leaving it to the Java runtime to do this work for them. In this case, GUI automation becomes impossible until you agree on a strict naming convention and make the developers implement it.
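Once a convention is agreed on, it is worth checking it mechanically on every build rather than relying on code review. The sketch below is a minimal illustration under stated assumptions: it takes a plain list of control names, as you might export it from your robot’s object spy or a test hook (that export is hypothetical), and flags controls with no name, with a name shaped like `button3` (the pattern AWT uses for its default component names), or with a name that breaks a made-up `<screen>.<control>` convention.

```python
import re
from typing import Iterable, Optional

# Hypothetical team convention: "<screen>.<control>", e.g. "login.submitButton".
CONVENTION = re.compile(r"^[a-z][a-zA-Z0-9]*\.[a-z][a-zA-Z0-9]*$")

# Assumption: names like "button0" or "panel12" are runtime defaults, so treat them as unnamed.
AUTO_GENERATED = re.compile(r"^[a-z]+\d+$")


def find_naming_violations(names: Iterable[Optional[str]]) -> list[str]:
    """Return one human-readable problem per control name that breaks the convention."""
    problems = []
    for index, name in enumerate(names):
        if not name:
            problems.append(f"control #{index}: no name set")
        elif AUTO_GENERATED.match(name):
            problems.append(f"control #{index}: '{name}' looks auto-generated")
        elif not CONVENTION.match(name):
            problems.append(f"control #{index}: '{name}' does not match <screen>.<control>")
    return problems


if __name__ == "__main__":
    # In practice this list would come from a dump of the control hierarchy (hypothetical).
    dump = ["login.userField", "login.submitButton", None, "button3", "OK_btn"]
    for problem in find_naming_violations(dump):
        print(problem)
```

The two regular expressions are the only place where your own team’s convention needs to go; the rest of the check stays the same.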

Validation

To automate an action or even long sequences of actions in the interface is usually a simple task, which doesn’t even require special programming skills. You just use the robot’s built-in recorder and that’s it. Easy – and it works, too. But in testing, actions are frequently performed to validate the output of the software, and this is where things get complicated. If you are strictly using standard controls with which the robot is familiar, and your result is a highly predictable number or string not containing any variables, the task can still be performed with virtually no programming (robots have a complete set of built-in comparison tools for that).

If your software has some kind of “save” or export function, you can use the full range of external comparators (including your own utilities) to validate large data sets or complicated file formats. But what if your controls are not readable by the robot? Then the resulting number may be visible on screen but out of the robot’s reach, because it was rendered by a custom label element chosen for its popularity among your programmers and your software’s fans.
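When an export is available, the external comparator can be a short script. The sketch below assumes the application exports a CSV file and compares it with a reference file cell by cell; numeric cells are compared with a small relative tolerance so that harmless rounding differences do not fail the test, and all other cells must match exactly. The file layout and the default tolerance are assumptions, not requirements of any particular tool.

```python
import csv
import math
from pathlib import Path


def _cells_match(expected: str, actual: str, rel_tol: float) -> bool:
    """Compare numerically when both cells parse as numbers, otherwise as exact strings."""
    try:
        return math.isclose(float(expected), float(actual), rel_tol=rel_tol)
    except ValueError:
        return expected == actual


def compare_exports(reference: Path, exported: Path, rel_tol: float = 1e-9) -> list[str]:
    """Return the differences between two CSV exports; an empty list means they match."""
    with reference.open(newline="") as ref_file, exported.open(newline="") as out_file:
        ref_rows = list(csv.reader(ref_file))
        out_rows = list(csv.reader(out_file))

    diffs = []
    if len(ref_rows) != len(out_rows):
        diffs.append(f"row count differs: {len(ref_rows)} vs {len(out_rows)}")
    # Row and column numbers are 1-based so they match what a spreadsheet shows.
    for row_no, (ref_row, out_row) in enumerate(zip(ref_rows, out_rows), start=1):
        if len(ref_row) != len(out_row):
            diffs.append(f"row {row_no}: column count differs")
            continue
        for col_no, (exp, act) in enumerate(zip(ref_row, out_row), start=1):
            if not _cells_match(exp, act, rel_tol):
                diffs.append(f"row {row_no}, column {col_no}: expected {exp!r}, got {act!r}")
    return diffs
```

Because the result is a list of readable differences rather than a single pass/fail flag, a failed run already tells you where to look.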

Most robots have a built-in bitmap comparator, which can be useful in certain cases, such as taking screenshots and comparing them to a reference screenshot, but this is not the best way to compare numbers. Imagine an output value that is calculated at runtime from several other values displayed on the screen at the same time and cannot be predicted in advance: there is simply no reference image to compare it with. Moreover, an automation script should be highly reusable, and any bitmap comparison depends on factors such as screen resolution, interface layout, and font sizes. Both are strong arguments against using this method to validate values.
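When the values can be read as text, the check can be expressed as arithmetic instead of pixels: recompute the expected output from the input values captured in the same snapshot and compare with a tolerance. This keeps the script independent of resolution, layout, and fonts. In the sketch below, the calculation rule (`total_with_fee`) and the tolerance are hypothetical; the real rule would come from the specification of the screen under test, and the parser assumes a `.` decimal separator and `,` as the thousands separator.

```python
import math
from typing import Callable, Sequence


def parse_displayed_number(text: str) -> float:
    """Turn a value read from a control, e.g. '1,234.50', into a float.

    Assumption: ',' is a thousands separator and '.' is the decimal separator.
    """
    return float(text.replace(",", "").strip())


def validate_derived_value(
    inputs: Sequence[str],
    displayed_output: str,
    rule: Callable[[Sequence[float]], float],
    abs_tol: float = 0.005,
) -> bool:
    """Recompute the expected output from inputs read in the same snapshot and compare numerically."""
    values = [parse_displayed_number(t) for t in inputs]
    expected = rule(values)
    actual = parse_displayed_number(displayed_output)
    return math.isclose(expected, actual, rel_tol=0.0, abs_tol=abs_tol)


def total_with_fee(values: Sequence[float]) -> float:
    """Hypothetical rule for illustration: quantity * price + flat fee."""
    quantity, price, fee = values
    return quantity * price + fee
```

For example, `validate_derived_value(["3", "1,200.50", "4.99"], "3,606.49", total_with_fee)` returns `True`, while a displayed total of `"3,606.50"` would be rejected, whatever the screen resolution happens to be.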

Some robots provide special libraries, plugins, etc., that can be injected into your software during the build (compilation) process. While this gives you access to a wider variety of controls, in 99% of cases you will not want to ship such a build to end users, because it is not the best decision from either a security or a performance standpoint. It also forces you to test one build and deliver another, which is not a good idea either. Moreover, this kind of injection does not guarantee that you will be able to reach all the information inside your controls.

Thick Client

All of the problems described above can be annoying, but you can still deal with them one way or another. The next level of complexity comes with a client-server architecture built around a thick client. A thick client usually needs a connection to the server side, but performs many actions on its own, such as asynchronously requesting and receiving data from the server.

The asynchronous nature of these operations can often be handled by queuing all the actions performed by the test script and inserting delays, wait functions, and other result-catching steps. But while this establishes an artificial synchronization between client and server, it also slows test execution down significantly. Now imagine that, in addition, the application under test has triggers that open overlapping modal windows, messages, warning panels, tabs, etc., after data is pushed from the server.
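Fixed delays have to be sized for the worst case, so every step pays the worst-case price. A polling wait returns as soon as the expected result appears and only pays the full timeout when something is actually wrong. A minimal sketch, assuming `probe` is any function that reads the current state through your robot (for example, a hypothetical `lambda: robot.read_text("ordersGrid")`) and returns something truthy once the data has arrived:

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class WaitTimeout(Exception):
    """Raised when the awaited condition does not become true in time."""


def wait_until(
    probe: Callable[[], T],
    timeout: float = 10.0,
    interval: float = 0.2,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Poll `probe` until it returns a truthy value instead of sleeping a fixed worst-case delay.

    The probe's value is returned so the caller can use what it found (e.g. the loaded text).
    `clock` and `sleep` are injectable so the helper can be tested without real waiting.
    """
    deadline = clock() + timeout
    while True:
        result = probe()
        if result:
            return result
        if clock() >= deadline:
            raise WaitTimeout(f"condition not met within {timeout} s")
        sleep(interval)
```

Raising a dedicated exception on timeout, rather than returning `None`, makes a missing server response show up as a clear failure of that step instead of as a confusing error several actions later.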

All this introduces uncertainty into your test script, and the script author is forced to anticipate and handle every possible outcome. Moreover, some of these windows and messages can inform the end user that vital conditions of the application’s execution have changed, for example, that the connection to the server has been lost. In this case, you’ll need to add some heuristics to recognize the actual state of the application, or simply accept that all subsequent actions will fail.
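One way to keep this uncertainty manageable is to make the anticipated windows explicit: a table of known popups, each with a verdict and an optional action, checked after every step. The sketch below assumes you can get the titles of the currently open windows from your robot; the titles, patterns, and actions are hypothetical. A window that matches no rule is reported as `UNKNOWN` rather than silently ignored, and a lost-connection message is classified as `ABORT`, since every later step would fail anyway.

```python
import re
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable, Iterable


class Verdict(IntEnum):
    """Outcome of a popup check, ordered by severity so that max() picks the worst."""
    CLEAR = 0      # no unexpected windows
    DISMISSED = 1  # only known, harmless popups; their actions were applied
    UNKNOWN = 2    # a window nobody anticipated; stop and inspect
    ABORT = 3      # application state changed (e.g. server connection lost)


def _no_action(title: str) -> None:
    """Default action: leave the window alone."""


@dataclass
class PopupRule:
    title_pattern: str                           # regex matched against the window title
    verdict: Verdict
    action: Callable[[str], None] = _no_action   # e.g. close it via the robot (hypothetical)


def check_popups(open_titles: Iterable[str], rules: list[PopupRule]) -> Verdict:
    """Apply the first matching rule to every open window and return the most severe verdict."""
    worst = Verdict.CLEAR
    for title in open_titles:
        verdict = Verdict.UNKNOWN  # unmatched windows are never treated as harmless
        for rule in rules:
            if re.search(rule.title_pattern, title, re.IGNORECASE):
                rule.action(title)
                verdict = rule.verdict
                break
        worst = max(worst, verdict)
    return worst
```

The heuristics the paragraph mentions then live in one reviewable table instead of being scattered through the script as ad hoc `if` statements.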

While trying to manage all the possible behaviors of such an application, it may seem logical to take full control of the server side during script execution. At this point, you may suddenly discover that your robot isn’t powerful enough to do that, or that the tools you already have for controlling the server are not compatible with the robot, and that fixing this will take significant effort. Since this effort was not planned initially, it will almost certainly extend your project’s timeline and increase its cost.

At this stage, your test script becomes a complex, long-running routine that requires optimization, splitting, and parallel execution to make it robust and to save execution time. How far you can take these optimizations depends closely on the testing environment.
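Splitting only pays off if the parts are independent, and a GUI robot usually owns the whole desktop and mouse, so parallelism has to come from separate (virtual) hosts rather than from several threads driving one desktop. The sketch below assumes a hypothetical `run_chunk(host, chunk)` that starts the robot on a given host, runs one self-contained part of the script from a clean state, and returns pass or fail; host and chunk names are placeholders.

```python
import queue
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence


def run_in_parallel(
    chunks: Sequence[str],
    hosts: Sequence[str],
    run_chunk: Callable[[str, str], bool],
) -> dict[str, bool]:
    """Run independent script chunks concurrently, with at most one chunk per host at a time."""
    if not hosts:
        raise ValueError("at least one host is required")

    free_hosts: "queue.Queue[str]" = queue.Queue()
    for host in hosts:
        free_hosts.put(host)

    def worker(chunk: str) -> bool:
        host = free_hosts.get()  # blocks until some host is free
        try:
            return run_chunk(host, chunk)
        finally:
            free_hosts.put(host)  # return the host to the pool even if the chunk raised

    # One thread per host: each thread only dispatches a chunk and waits for its result.
    with ThreadPoolExecutor(max_workers=len(hosts)) as pool:
        results = list(pool.map(worker, chunks))
    return dict(zip(chunks, results))
```

If chunks share state on the server side, they are not really independent, and running them in parallel will produce exactly the kind of nondeterminism described above.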

Environment

Execution facilities are a very important part of any testing process, and automation puts additional requirements on them. Virtualization technologies and cloud computing have substantially changed how testing labs are deployed: today you can set up a flexible testing environment in a corporate or even public cloud within minutes. But this usually implies remote access to the virtual hosts.

The question is how the robot will survive when the remote connection drops. Of course, this depends on many factors, including the OS, the remote connection tool, and its settings, but practice shows that robots are helpless when the whole desktop and mouse context suddenly disappears. The most common mistake is to underestimate these environmental issues at the very beginning of an automation project.

This leads to very “creative” solutions at later stages, where the simplicity of the script, its parallel execution, and other measures aimed at making it robust are completely offset by an overcomplicated and unstable test lab configuration.

In conclusion, the decision to start an automation project, or to buy a new super-clicking robot, can’t be made just by looking at the robot’s feature list. The software under test needs proper analysis, and the project needs skilled people assigned to it. The following questions can be used as a checklist:

- What kind of controls are used in the target application? Does the tool support them? How heavily are these controls modified? How difficult is it to map them inside the robot?

- The robot can act, but how is the result going to be validated? Is all the data required for this purpose available to the robot?

- Can the robot itself control the backend? Do I have the tools to control the backend? Can these tools be easily synced with the robot?

- Do I have enough programming resources for that kind of project? (Just recording and replaying is a myth!) Is the application itself ready for automation? What exactly is required to make it ready?

- What is the application’s lifecycle? Will the final test script be executed at least 10 times over that lifecycle? Is it possible to split everything into smaller parts and execute them in parallel?

- What environment will be used for testing? If virtualization or remote connections are involved, is the robot compatible with the technologies that are going to be used?

If all of these questions have been answered, go ahead and start your automation project: it has a good chance of success.

[1] Some programs do not produce a storable result the way word processors or graphics editors do; they are built purely for session-based work. Examples include trading software, some games, or, in the simplest case, calculators.