Maintaining Trading Platform Uptime Through Turbulent Markets

Software development, architecture, and testing principles that Devexperts adhere to.

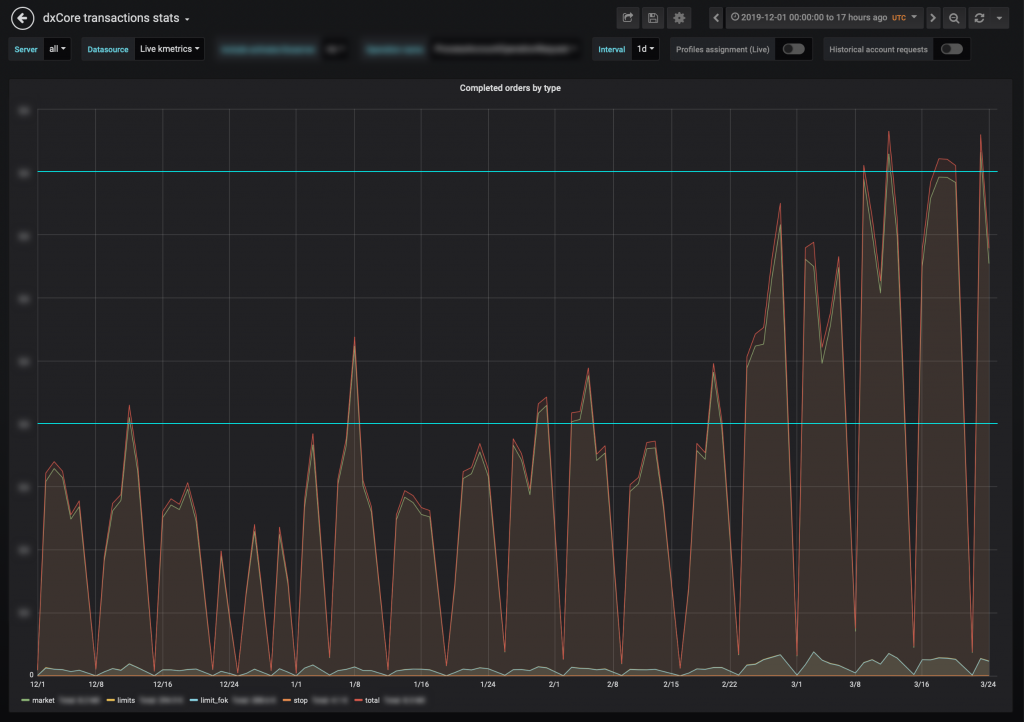

The novel coronavirus and its attendant disease, Covid-19, have been wreaking havoc on global markets. Since the end of February, we have witnessed the S&P 500 undergo its fastest-ever decline from an all-time high, as well as the largest drop in the price of crude oil since the first Gulf War in 1991. All major global indices are now down well over 20%, past the threshold that conventionally marks entry into a bear market, and volatility across FX, gold, oil, and stock markets is at multi-year highs. As a result, many brokerages across a variety of asset classes are reporting greatly increased trading volumes.

Even the most robust trading infrastructure is tested to its limits when volumes surge and volatility spikes. Several high-profile venues in both the institutional and retail spaces have already experienced outages as their systems struggled to handle the increased load.

What steps can brokers take to mitigate the strain on their systems during such times? How can they best prepare and future-proof their businesses for such occurrences going forward? We asked our team of engineers to share a few of their insights.

Front End Optimisations

A highly scalable trading platform architecture should, at the very least, tolerate bursts of market data. During times of high market volatility, it’s reasonable to expect the amount of market data to be up to 10 times higher than normal, which can overload servers and network channels.

At such times, it’s imperative that transport protocols are in place to prioritise traffic. This allows for the most critical functions of a trading platform, such as the opening, closing and editing of positions, to be assigned a higher priority over less critical functions such as the streaming of market data.
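As a rough illustration of this kind of prioritisation, the sketch below queues outbound messages so that order actions always leave before market data. It is a minimal sketch under stated assumptions, not Devexperts' transport protocol: `MessageKind`, `OutboundMessage`, and `PrioritisedOutbox` are hypothetical names, and a real protocol would also have to deal with fairness and back-pressure.

```java
import java.util.Comparator;
import java.util.concurrent.PriorityBlockingQueue;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical message kinds, declared from most to least urgent.
enum MessageKind { ORDER_ACTION, ACCOUNT_UPDATE, MARKET_DATA }

// One outbound message; 'sequence' keeps arrival order within a kind.
final class OutboundMessage {
    final MessageKind kind;
    final long sequence;
    final String payload;

    OutboundMessage(MessageKind kind, long sequence, String payload) {
        this.kind = kind;
        this.sequence = sequence;
        this.payload = payload;
    }
}

final class PrioritisedOutbox {
    // Enum declaration order decides urgency; ties are broken by arrival order.
    private static final Comparator<OutboundMessage> URGENCY =
        Comparator.comparing((OutboundMessage m) -> m.kind)
                  .thenComparingLong(m -> m.sequence);

    private final PriorityBlockingQueue<OutboundMessage> queue =
        new PriorityBlockingQueue<>(1024, URGENCY);
    private final AtomicLong nextSequence = new AtomicLong();

    void enqueue(MessageKind kind, String payload) {
        queue.put(new OutboundMessage(kind, nextSequence.getAndIncrement(), payload));
    }

    // Returns the most urgent pending message, or null if nothing is queued.
    OutboundMessage pollNext() {
        return queue.poll();
    }
}
```

With strict ordering like this, a sustained stream of order traffic could starve market data entirely, so a production design would probably combine it with the conflation techniques described in this section.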

One way to achieve this is a “latest snapshot” approach, in which only the most recent market data is delivered: the end trader is interested in current quotes, not in quotes that were valid a couple of milliseconds ago. Price quotes can also be conflated by removing duplicates, and quote queues can be shortened by intelligently dropping redundant entries, temporarily relieving strain on both the network and servers.
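The snapshot and conflation ideas can be sketched in a few lines. The example below is illustrative only and assumes a simplified `Quote` with a symbol, bid, and ask; `ConflatingQuoteBuffer` is a hypothetical name. It keeps at most one pending quote per symbol and skips quotes whose prices match what was last sent.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified quote, assumed for illustration.
final class Quote {
    final String symbol;
    final double bid;
    final double ask;

    Quote(String symbol, double bid, double ask) {
        this.symbol = symbol;
        this.bid = bid;
        this.ask = ask;
    }

    boolean samePrices(Quote other) {
        return other != null && bid == other.bid && ask == other.ask;
    }
}

final class ConflatingQuoteBuffer {
    // At most one unsent quote per symbol: the latest snapshot.
    private final Map<String, Quote> pending = new LinkedHashMap<>();
    // What each symbol last looked like on the wire, used to drop duplicates.
    private final Map<String, Quote> lastSent = new HashMap<>();

    // A newer quote overwrites any older, still unsent quote for the same symbol.
    synchronized void offer(Quote quote) {
        pending.put(quote.symbol, quote);
    }

    // Returns the quotes worth sending now and clears the pending set.
    synchronized List<Quote> drain() {
        List<Quote> out = new ArrayList<>();
        for (Quote quote : pending.values()) {
            if (!quote.samePrices(lastSent.get(quote.symbol))) {
                out.add(quote);
                lastSent.put(quote.symbol, quote);
            }
        }
        pending.clear();
        return out;
    }
}
```

However many quotes arrive between two calls to `drain()`, the client receives at most one per symbol, so the outbound volume is bounded by the number of subscribed symbols rather than by the incoming tick rate.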

At Devexperts we have overcome such issues through the development of custom transport protocols using the publish-subscribe messaging pattern, which allow for such prioritisation to be conducted at scale when unforeseen market events lead to surges in activity and their resultant bottlenecks. The less data you send (only what the trader wants to see right now), the less load is placed on the network and servers.

Back End Optimisations

However, the above optimisations only apply to user interfaces. The demands on the back end are obviously far higher as all incoming market data must be checked against real-time order instructions, existing positions, price alerts, and any pending orders. Even though this is unavoidable, the process can be made more efficient. Here are some essential best practices:

- Pass arrays or lists to methods so that one call handles a whole batch, reducing the total number of method calls. Instead of having onQuote(Quote q), your method signature should read onQuotes(List<Quote> q).

- Opt for garbage-free market data libraries where possible, as garbage collection can be costly in terms of CPU cycles. Some form of cyclic (ring) buffer is a must to save CPU time.

- Use read-only (immutable) objects in the entity framework, so that the same copy can be shared and reused, with version numbers distinguishing successive states of an object.
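The first two practices can be combined in one small sketch: a preallocated cyclic buffer that hands quotes to the consumer one batch at a time. This is an illustration under assumptions rather than a description of any particular library. `QuoteBatchListener` and `QuoteRingBuffer` are hypothetical names, symbols are represented by integer ids, the batch is passed as primitive arrays instead of a List<Quote> to avoid allocating objects, and the class is single-threaded for brevity.

```java
// Hypothetical batch callback: one call delivers 'count' quotes.
// The arrays are reused between calls, so listeners must not keep references.
interface QuoteBatchListener {
    void onQuotes(int[] symbolIds, double[] bids, double[] asks, int count);
}

final class QuoteRingBuffer {
    // Ring storage, allocated once.
    private final int[] symbolIds;
    private final double[] bids;
    private final double[] asks;
    // Scratch arrays used to present a batch in arrival order.
    private final int[] batchIds;
    private final double[] batchBids;
    private final double[] batchAsks;
    private final int mask;
    private long head; // next position to read
    private long tail; // next position to write

    QuoteRingBuffer(int capacity) {
        if (capacity <= 0 || Integer.bitCount(capacity) != 1) {
            throw new IllegalArgumentException("capacity must be a power of two");
        }
        symbolIds = new int[capacity];
        bids = new double[capacity];
        asks = new double[capacity];
        batchIds = new int[capacity];
        batchBids = new double[capacity];
        batchAsks = new double[capacity];
        mask = capacity - 1;
    }

    // Stores a quote without allocating; when full, the oldest quote is dropped.
    void publish(int symbolId, double bid, double ask) {
        if (tail - head == symbolIds.length) {
            head++;
        }
        int slot = (int) (tail & mask);
        symbolIds[slot] = symbolId;
        bids[slot] = bid;
        asks[slot] = ask;
        tail++;
    }

    // Delivers everything pending in a single callback; returns the batch size.
    int drainTo(QuoteBatchListener listener) {
        int count = (int) (tail - head);
        for (int i = 0; i < count; i++) {
            int slot = (int) ((head + i) & mask);
            batchIds[i] = symbolIds[slot];
            batchBids[i] = bids[slot];
            batchAsks[i] = asks[slot];
        }
        head = tail;
        if (count > 0) {
            listener.onQuotes(batchIds, batchBids, batchAsks, count);
        }
        return count;
    }
}
```

After construction, neither `publish` nor `drainTo` allocates, so the steady-state path creates no work for the garbage collector. Dropping the oldest entry when the buffer is full is one possible overflow policy; a system that must process every quote would need back-pressure instead.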

A brokerage’s trading infrastructure should be, at the very least, temporarily tolerant of the kinds of surges in market activity that take place during Black Swan events. Events such as the 2008 financial crisis, the Swiss National Bank flash crash of 2015, or the current coronavirus crisis occur only once every few years. Regardless of their infrequency, brokerage architectures should be over-engineered to handle loads in excess of what the business regards as its maximum required capacity.

Modularity is important, as is flexible deployment. The platform owner should be able to add more hardware when the need arises. If a server running on a single node lags under heavy load, it should be split into modules: the most heavily loaded part can be extracted into a separate instance and scaled out to several such instances, while the parts that are not under heavy load remain on a single node as before. A microservice architecture makes this easy to do. In this way, a balance can be struck between deployment costs, product installation, and performance.

Testing

The subject on which our engineers were most adamant was testing. Clearly, this is not something that can be done on the fly while a crisis is unfolding. Nevertheless, they were vocal that this is the area where many businesses cut corners when rushing to ship a product. This, according to them, is what lets brokerages down when market events test their systems in ways that the brokerages themselves neglected to before launch.

Insights on testing and monitoring:

- Stress-test thoroughly before release in order to spot and fix problems that users may encounter at times of high load. Know your limits.

- Monitor client activity in order to determine both the maximum number of simultaneous users and the maximum number of trades that may be placed in a given time.

- When stress-testing brokerage systems, treat the maximums observed during monitoring as if they were the average load, and then double them for the purposes of testing. This allows for significant headroom in the event of a Black Swan.

- Systems should be loaded to maximum capacity and pushed beyond this in order to determine both the loads at which performance deteriorates and for how long the system can operate under a specified load without needing to be restarted.

- Business-critical functions should be insulated from frequent changes for all of the above reasons.

- Devote most of the tests to the functionality critical to the business. Don’t add changes if you are not confident in them.
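The doubling rule above comes down to simple arithmetic, sketched here with made-up numbers. `StressPlan` and its methods are hypothetical helpers, and the ramp that starts at the observed peak and ends one step beyond the target is just one possible way to look for the point at which performance starts to deteriorate.

```java
import java.util.ArrayList;
import java.util.List;

final class StressPlan {
    // Observed peaks are doubled to obtain the stress-test target.
    static final int HEADROOM_FACTOR = 2;

    static int targetLoad(int observedPeak) {
        return Math.multiplyExact(observedPeak, HEADROOM_FACTOR);
    }

    // Load levels from the observed peak up to the target in equal steps,
    // plus one extra step beyond the target.
    static List<Integer> rampSteps(int observedPeak, int increments) {
        int target = targetLoad(observedPeak);
        int step = Math.max(1, (target - observedPeak) / increments);
        List<Integer> loads = new ArrayList<>();
        for (int i = 0; i <= increments + 1; i++) {
            loads.add(observedPeak + i * step);
        }
        return loads;
    }
}
```

For example, if monitoring showed a peak of 1,000 trades per second (an invented figure), `StressPlan.targetLoad(1000)` gives a test target of 2,000, and `StressPlan.rampSteps(1000, 4)` yields load levels of 1,000, 1,250, 1,500, 1,750, 2,000, and 2,250 trades per second.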

Conclusions

The main takeaway here is that failing to prepare is tantamount to preparing to fail. Though no system can be completely impervious to unforeseen events, sufficient resources ought to be dedicated at the outset, during the architecture, development, and testing phases. This is so that when the unknown becomes known, your maintenance and support department won’t be running around putting out fires during times of unexpectedly high market volatility. These things happen with alarming regularity, even though it’s human nature to pretend that they don’t, and they cannot be prepared for in a day. The moral of the story? Cutting corners only leads to going in circles.