Market Data Costs Are Soaring: How to Reduce Them

The Data Deluge

If there’s a trend to be observed across all industries and walks of life, it’s that data is eating—or more accurately flooding—the world. Last year it was estimated that by 2020 the amount of information in the digital realm would reach 44 zettabytes. That’s forty times more bytes than there are stars in the observable universe. Quite a sobering statistic, you’ll agree. Now, what percentage of that data can be accounted for by cat videos alone, we’re not completely sure.

A similar trend is observable in the industry that we’ve been following most closely at Devexperts: online trading. Where the cost of procuring, managing and storing market data was once so negligible that it hardly factored into the business strategies of most brokerages, today there’s an entire cottage industry growing up around helping brokers to optimise this most vital aspect of their businesses.

The online FX industry has gone through a number of notable shifts in recent years. You may have noticed many established brands trying to shake off the “FX” part of their names in favour of “Markets”. Their first foray beyond the usual major, minor and exotic currency pairs was the addition of precious metals and energy to their respective offerings well over a decade ago. This was followed by stocks, indices, futures, bonds and, most recently, crypto assets and ETFs. Each new asset class brings with it bundles of symbols that have to be integrated, transmitted and stored.

This shift to multi-asset brokerage, the concomitant popularisation and growth of the industry itself, as well as developments in algorithmic and high-frequency trading, have all multiplied the amount of data that brokerages have to contend with. More instruments, more traders and more transactions have led to spiralling data costs. Supplying this data is the business of data vendors, and brokerages are dependent on them for it. As an example, dxFeed, our market data subsidiary, currently stores petabytes of market data. Through the course of our work in the industry, we’ve identified several key inefficiencies in the management of this data, and we’d like to share some of our insights here.

Perform Regular Audits

Today’s flood of market data is forcing many to devote at least some operational bandwidth to market data management. It may seem counter-intuitive to suggest that a broker wishing to cut costs should devote extra resources to this, but you can’t optimise something that’s costing you money without taking the time to understand why and how, particularly when it comes to an area as business-critical as market data. Market data management should be part of every brokerage’s overall strategy. This involves performing regular usage audits in order to identify which vendors are critical to the business, which have raised their prices and what alternatives there are in the market. Is there an overlap between vendors? If so, it’s important to identify redundancies. Is your business paying for packages that you don’t need and aren’t using? Are long-term agreements really saving you money, or locking you in to an ever-changing market where vendor flexibility could be a competitive advantage?

Optimise What You Have

Once you’ve taken stock of precisely what data you’re receiving from which vendors and made cuts to account for redundancies and unnecessary streams, it’s time to work on making the most of the data that you’re actually paying for. There are many brokers who still stream unrefined market data at extra cost to their businesses. Employing algorithms to compare feeds and filter out price spikes that deviate by more than a given percentage will almost certainly add value to the data that you’re already paying for. It will also improve the experience of trading with your firm, as spikes wreak havoc on the best-laid plans of the majority of your traders, needlessly triggering limit and stop-loss orders without there having been a fundamental change in trend.
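As a rough illustration of percentage-based spike filtering, here is a minimal Python sketch. It is not how any particular vendor does it: the function name `filter_spikes`, the 1% threshold and the five-tick window are all assumptions made for the example, and it works on a single feed rather than comparing several.

```python
from collections import deque
from statistics import median


def filter_spikes(prices, max_deviation_pct=1.0, window=5):
    """Drop ticks that deviate too far from recently accepted prices.

    Hypothetical helper: the threshold and window size are illustrative
    assumptions, not recommended production values.
    """
    recent = deque(maxlen=window)  # last few accepted prices
    accepted = []
    for price in prices:
        if recent:
            reference = median(recent)
            deviation_pct = abs(price - reference) / reference * 100
            if deviation_pct > max_deviation_pct:
                continue  # treat as a spike and do not forward it
        recent.append(price)
        accepted.append(price)
    return accepted


clean = filter_spikes([100.0, 100.1, 100.2, 150.0, 100.3])
print(clean)  # [100.0, 100.1, 100.2, 100.3]
```

A filter this simple would also reject a genuine, sustained jump in price, so a real implementation would need some way to accept a new price level once it is confirmed, for instance by a second feed.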

Cost of Service

Your greatest savings are likely to be made by focusing on the costs of your subscriptions. This is where competent third parties can come in to offer technological solutions that can radically cut costs. Such providers are capable of supplying data that approximates the original real-time exchange streams. This massively reduces exchange costs as the method employs derived data that cannot be used to reconstitute original tick data.

Additionally, opting for a third-party provider will often allow for much more control over the symbol data that you pay for. The packages of most of the existing big players can be inflexible, requiring you to purchase minimum sets of symbols, some of which may not be of interest to your traders. Advanced third parties will allow you to take advantage of a pick ‘n’ mix approach, where you can select the symbols that you require and only pay for those.

Cost of Storage

As retail traders become more sophisticated in their understanding and analysis, having access to accurate price histories becomes all the more important. How far back price histories go is usually determined by IT budgets, but this need not be the case. A competent third-party provider will have options that can be tailored to the needs of your traders. These include historical price data that can be streamed on demand, as well as the detailed market replay data required by advanced clients who wish to backtest and optimise their trading bots.

Cost of Transmission

Retail brokerages are usually hamstrung in this regard, as the way they distribute data is not in their control when using a third-party platform. There is a host of technological hacks that can be employed to optimise transmission costs, and existing platform providers resort to them with varying degrees of effectiveness.

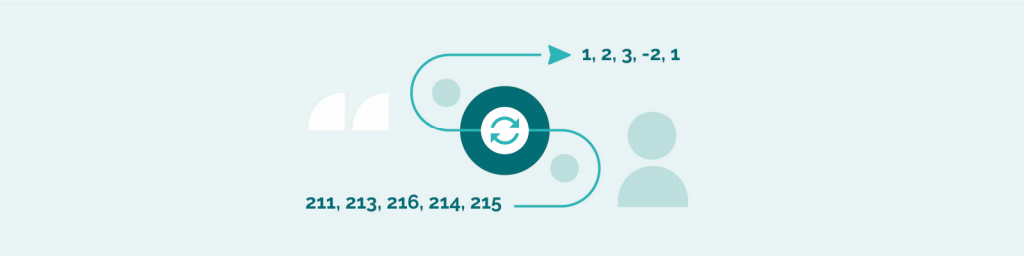

Quote conflation and removal algorithms sift through the tick-by-tick data, smoothing it by not transmitting duplicate ticks and removing quotes that are no longer valid, while also taking available bandwidth into account. In other words, if the incoming stream reads “aabbbabc”, after quote conflation it will look more like “ababc”. The data forwarded on to clients appears identical to the real-world market data, with duplicates and redundant quotes removed. This can free up much-needed bandwidth and reduce latency, especially during times of high volatility.
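The duplicate-removal part of conflation can be sketched in a few lines of Python. This is a simplified illustration only: the function name `conflate` is hypothetical, and the sketch skips the removal of stale quotes and any bandwidth-aware throttling.

```python
def conflate(ticks):
    """Drop any tick that repeats the one sent immediately before it."""
    forwarded = []
    for tick in ticks:
        if not forwarded or tick != forwarded[-1]:
            forwarded.append(tick)
    return forwarded


# Each letter stands in for one quote value, as in the example above.
print("".join(conflate("aabbbabc")))  # ababc
```

Only consecutive repeats are dropped, so a quote that returns to an earlier value after a change is still forwarded.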

Delta-encoding is another method, in which streams of data are transmitted and saved in the form of differences (or deltas) between sequential values, rather than as the entire stream of values, complete with their redundancies. Delta-encoding reduces the variance of the values to be transmitted and stored. So, instead of a stream of price ticks that appears as “211, 213, 216, 214, 215”, after delta-encoding it would look like this: “211, 2, 3, -2, 1”, with the first value sent in full and each subsequent value sent as the difference from its predecessor. By only transmitting the differences, delta-encoding reduces the number of bits required to send and store historical market data.
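A minimal Python sketch of the idea follows, using the hypothetical function names `delta_encode` and `delta_decode`. It assumes integer prices and keeps the first value in full, so that the receiver can rebuild the original stream from the deltas alone.

```python
from itertools import accumulate


def delta_encode(values):
    """Keep the first value, then store each value as a difference."""
    if not values:
        return []
    deltas = [values[0]]
    for previous, current in zip(values, values[1:]):
        deltas.append(current - previous)
    return deltas


def delta_decode(deltas):
    """Rebuild the original values with a running sum of the deltas."""
    return list(accumulate(deltas))


ticks = [211, 213, 216, 214, 215]
encoded = delta_encode(ticks)
print(encoded)                # [211, 2, 3, -2, 1]
print(delta_decode(encoded))  # [211, 213, 216, 214, 215]
```

The saving comes from the deltas being small numbers, which a variable-length or bit-packed encoding can then store in fewer bits than the full prices.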

For incumbents in the space, optimisations in this area are only going to be relevant if you’re in the process of rethinking your existing platform strategy, or if you have already partnered with a provider who places a premium on customisability and is willing to work with you to achieve the efficiencies that your business requires. Newcomers to the space have a much broader set of options available to them than the brokers of even a decade ago. They will have to carefully weigh the cost and time requirements of developing a proprietary platform from scratch against partnering with a third party that has the experience and willingness to tailor its existing product line to their needs.

Final Thoughts

These are just a handful of ideas and optimisations that we’ve been helping brokerages with, both at Devexperts and at dxFeed. Of course, each brokerage business has its own unique set of pressures and inefficiencies to be identified and addressed. However, one thing that we’ve found to be true almost across the board is that, where market data is concerned, there’s almost always scope for improvements to be made, efficiencies to be gained and money to be saved. We’re committed to continuing this work as the market data industry evolves and the volume of data continues to grow. We’d be delighted to dig into the weeds with you and look at the specifics of how your business is managing its own market data needs. It’s kinda what we do here. So please feel free to contact us for a consultation and market data audit. You may be surprised by how many potential savings are lurking under all that data.